tmp

Jupyterlab

No kernel when running jupyter lab

(my-torch-env3.6) jhseo@jhseo-Inspiron-7559:~/anaconda3/envs/my-torch-env3.6$ jupyter lab

[W 2021-03-26 15:32:34.434 ServerApp] The module 'jupyter_kite' could not be found. Are you sure the extension is installed?

[I 2021-03-26 15:32:34.444 ServerApp] jupyterlab | extension was successfully linked.

[W 2021-03-26 15:32:34.460 ServerApp] The module 'ipyparallel.nbextension' could not be found. Are you sure the extension is installed?

[I 2021-03-26 15:32:34.460 ServerApp] nbclassic | extension was successfully linked.

[I 2021-03-26 15:32:34.483 ServerApp] The port 8888 is already in use, trying another port.

[I 2021-03-26 15:32:34.488 LabApp] JupyterLab extension loaded from /home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/jupyterlab

[I 2021-03-26 15:32:34.488 LabApp] JupyterLab application directory is /home/jhseo/anaconda3/envs/my-torch-env3.6/share/jupyter/lab

[I 2021-03-26 15:32:34.491 ServerApp] jupyterlab | extension was successfully loaded.

[I 2021-03-26 15:32:34.494 ServerApp] nbclassic | extension was successfully loaded.

[I 2021-03-26 15:32:34.495 ServerApp] Serving notebooks from local directory: /home/jhseo/anaconda3/envs/my-torch-env3.6

[I 2021-03-26 15:32:34.495 ServerApp] Jupyter Server 1.4.1 is running at:

[I 2021-03-26 15:32:34.495 ServerApp] http://localhost:8889/lab?token=ce3eb05e0eff2d69b831eb6bd752327a111e3a4ad8cd4809

[I 2021-03-26 15:32:34.495 ServerApp] or http://127.0.0.1:8889/lab?token=ce3eb05e0eff2d69b831eb6bd752327a111e3a4ad8cd4809

[I 2021-03-26 15:32:34.495 ServerApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[C 2021-03-26 15:32:34.501 ServerApp]

To access the server, open this file in a browser:

file:///home/jhseo/.local/share/jupyter/runtime/jpserver-23664-open.html

Or copy and paste one of these URLs:

http://localhost:8889/lab?token=ce3eb05e0eff2d69b831eb6bd752327a111e3a4ad8cd4809

or http://127.0.0.1:8889/lab?token=ce3eb05e0eff2d69b831eb6bd752327a111e3a4ad8cd4809

Opening in existing browser session.

[I 2021-03-26 15:32:39.442 LabApp] Build is up to date

TermSocket.open: 1

[I 2021-03-26 15:32:39.803 ServerApp] New terminal with specified name: 1

TermSocket.open: Opened 1

Websocket closed

[W 2021-03-26 15:32:40.188 ServerApp] Notebook MolTrans_Study.ipynb is not trusted

[I 2021-03-26 15:32:40.708 ServerApp] Kernel started: 37b30e6c-144e-47d8-8c7c-ec32ec2edded

Traceback (most recent call last):

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel_launcher.py", line 15, in <module>

from ipykernel import kernelapp as app

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/__init__.py", line 2, in <module>

from .connect import *

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/connect.py", line 11, in <module>

from IPython.core.profiledir import ProfileDir

ModuleNotFoundError: No module named 'IPython.core.profiledir'

[I 2021-03-26 15:32:43.709 ServerApp] AsyncIOLoopKernelRestarter: restarting kernel (1/5), new random ports

Traceback (most recent call last):

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel_launcher.py", line 15, in <module>

from ipykernel import kernelapp as app

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/__init__.py", line 2, in <module>

from .connect import *

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/connect.py", line 11, in <module>

from IPython.core.profiledir import ProfileDir

ModuleNotFoundError: No module named 'IPython.core.profiledir'

[I 2021-03-26 15:32:46.716 ServerApp] AsyncIOLoopKernelRestarter: restarting kernel (2/5), new random ports

Traceback (most recent call last):

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel_launcher.py", line 15, in <module>

from ipykernel import kernelapp as app

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/__init__.py", line 2, in <module>

from .connect import *

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/connect.py", line 11, in <module>

from IPython.core.profiledir import ProfileDir

ModuleNotFoundError: No module named 'IPython.core.profiledir'

[I 2021-03-26 15:32:49.723 ServerApp] AsyncIOLoopKernelRestarter: restarting kernel (3/5), new random ports

Traceback (most recent call last):

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel_launcher.py", line 15, in <module>

from ipykernel import kernelapp as app

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/__init__.py", line 2, in <module>

from .connect import *

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/connect.py", line 11, in <module>

from IPython.core.profiledir import ProfileDir

ModuleNotFoundError: No module named 'IPython.core.profiledir'

[I 2021-03-26 15:32:52.735 ServerApp] AsyncIOLoopKernelRestarter: restarting kernel (4/5), new random ports

Traceback (most recent call last):

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel_launcher.py", line 15, in <module>

from ipykernel import kernelapp as app

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/__init__.py", line 2, in <module>

from .connect import *

File "/home/jhseo/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/ipykernel/connect.py", line 11, in <module>

from IPython.core.profiledir import ProfileDir

ModuleNotFoundError: No module named 'IPython.core.profiledir'

[W 2021-03-26 15:32:55.750 ServerApp] AsyncIOLoopKernelRestarter: restart failed

[W 2021-03-26 15:32:55.750 ServerApp] Kernel 37b30e6c-144e-47d8-8c7c-ec32ec2edded died, removing from map.

- Googled with several different keywords.

ImportError: cannot import name 'generator_to_async_generator'

- Solution

- In my case with python3.7, I uninstalled both ipython and prompt_toolkit as shown by @stas00 and just installed ipython so that it installs the compatible prompt_toolkit itself

pip uninstall -y ipython prompt_toolkit

pip install ipython
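After the reinstall, a quick way to confirm that the modules from the traceback import cleanly is to try them one by one with the environment's own Python. This is a minimal sketch; the helper name `check_kernel_imports` and the default module list are illustrative choices based on the traceback above, not an official Jupyter diagnostic.

```python
import importlib


def check_kernel_imports(modules=("IPython.core.profiledir", "ipykernel.kernelapp", "prompt_toolkit")):
    """Try importing each module and report which ones succeed.

    The default names are taken from the traceback above; the helper itself
    is hypothetical and only meant as a quick sanity check.
    """
    results = {}
    for name in modules:
        try:
            importlib.import_module(name)
            results[name] = True
        except ImportError:  # ModuleNotFoundError is a subclass of ImportError
            results[name] = False
    return results
```

Calling `check_kernel_imports()` inside `my-torch-env3.6` should return `True` for every entry once the kernel can start again.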

PyTorch

torch.nn.BCELoss:

- Binary Cross Entropy

- Creates a criterion that measures the binary cross entropy between the target and the output. (Reference)
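To make the formula concrete: with the default `reduction='mean'`, BCELoss computes \(\ell = -\frac{1}{n}\sum_i \left[y_i \log x_i + (1-y_i)\log(1-x_i)\right]\), where \(x_i\) are predicted probabilities and \(y_i\) are targets. Below is a pure-Python sketch of that computation (no PyTorch needed). The names `bce_loss` and `_clamped_log` are hypothetical; the clamp of the log terms at -100 follows what the PyTorch documentation describes for BCELoss.

```python
import math


def _clamped_log(v):
    # BCELoss clamps its log outputs to be >= -100, so log(0) stays finite
    return -100.0 if v <= 0 else max(math.log(v), -100.0)


def bce_loss(outputs, targets):
    """Mean binary cross entropy, mirroring torch.nn.BCELoss() with reduction='mean'.

    outputs: predicted probabilities in [0, 1] (e.g. after a sigmoid)
    targets: target values in [0, 1]
    """
    if not outputs or len(outputs) != len(targets):
        raise ValueError("outputs and targets must be non-empty and the same length")
    total = 0.0
    for x, y in zip(outputs, targets):
        total += -(y * _clamped_log(x) + (1 - y) * _clamped_log(1 - x))
    return total / len(outputs)
```

With PyTorch installed, `torch.nn.BCELoss()(torch.tensor([0.5]), torch.tensor([1.0]))` should give the same value as `bce_loss([0.5], [1.0])`, namely \(\log 2 \approx 0.693\).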

Tensor:

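- Tensors are (kind of) like np.arrays.

- "All tensors are immutable like Python numbers and strings: you can never update the contents of a tensor, only create a new one." This sentence comes from the TensorFlow guide and describes tf.Tensor. PyTorch tensors are mutable: in-place operations (methods with a trailing underscore, such as add_()) modify a tensor's contents.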

The backward() method of the torch.Tensor class computes the gradient of the current tensor w.r.t. graph leaves.

w.r.t.: with respect to (i.e. "with regard to").

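By default, a tensor does not track gradients: its requires_grad is False and its grad attribute is None. To check whether a tensor tracks gradients, look at tensor.requires_grad, which returns True if it does and False otherwise.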

scalar: …

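torch.optim is a package implementing various optimization algorithms. Most commonly used methods are already supported, and the interface is general enough that more sophisticated ones can also be easily integrated in the future.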
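The usual training step is `optimizer.zero_grad()`, `loss.backward()`, `optimizer.step()`. For `torch.optim.SGD` with its default `momentum=0` and no weight decay, `step()` applies the plain update \(p \leftarrow p - \mathrm{lr}\cdot\nabla_p \mathrm{loss}\). The sketch below imitates that loop in pure Python on a hypothetical one-parameter model \(y \approx w x\); the gradient is derived by hand here instead of by autograd.

```python
def sgd_fit(x, y, w=0.0, lr=0.1, steps=100):
    """Fit w in the toy model y ~ w * x with plain SGD (no momentum).

    Mimics the zero_grad / backward / step cycle for one scalar parameter.
    """
    for _ in range(steps):
        # forward pass: squared error of a single sample
        pred = w * x
        # "backward": d/dw (w*x - y)^2 = 2 * (w*x - y) * x, derived by hand
        grad = 2 * (pred - y) * x
        # "step": the vanilla SGD update rule p <- p - lr * grad
        w = w - lr * grad
    return w
```

For example, `sgd_fit(1.0, 3.0)` converges to a value very close to `3.0`, since each step shrinks the error by a factor of 0.8.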

Backward function in PyTorch

- Thus, by default, backward() is called on a scalar tensor and expects no arguments. (Source)

Variable API has been deprecated

- How to declare a scalar as a parameter in PyTorch?

import torch

x = torch.randn(1, 1, requires_grad=True)  # 1x1 tensor tracked by autograd; no Variable wrapper needed

- Variable API has been deprecated. official docs

CUDA out of memory

- Error message

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-18-866d0e46d218> in <module>

2 #biosnap interaction times 1e-6, flat, batch size 64, len 205, channel 3, epoch 50

3 s = time()

----> 4 model_max, loss_history = main(1, 5e-6)

5 e = time()

6 print(e-s)

<ipython-input-17-36825a101074> in main(fold_n, lr)

113 model.train()

114 for i, (d, p, d_mask, p_mask, label) in enumerate(training_generator):

--> 115 score = model(d.long().cuda(), p.long().cuda(), d_mask.long().cuda(), p_mask.long().cuda())

116

117 label = Variable(torch.from_numpy(np.array(label)).float()).cuda()

~/anaconda3/envs/my-torch-env3.6/lib/python3.6/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

725 result = self._slow_forward(*input, **kwargs)

726 else:

--> 727 result = self.forward(*input, **kwargs)

728 for hook in itertools.chain(

729 _global_forward_hooks.values(),

~/anaconda3/envs/my-torch-env3.6/MolTrans/models.py in forward(self, d, p, d_mask, p_mask)

90

91 # repeat to have the same tensor size for aggregation

---> 92 d_aug = torch.unsqueeze(d_encoded_layers, 2).repeat(1, 1, self.max_p, 1) # repeat along protein size

93 p_aug = torch.unsqueeze(p_encoded_layers, 1).repeat(1, self.max_d, 1, 1) # repeat along drug size

94

RuntimeError: CUDA out of memory. Tried to allocate 640.00 MiB (GPU 0; 3.95 GiB total capacity; 2.49 GiB already allocated; 587.12 MiB free; 2.53 GiB reserved in total by PyTorch)
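The allocation fails on the line `d_aug = torch.unsqueeze(d_encoded_layers, 2).repeat(1, 1, self.max_p, 1)`. Assuming `d_encoded_layers` has shape `(batch, max_d, hidden)`, the repeated tensor has shape `(batch, max_d, max_p, hidden)`, and `p_aug` has the same shape, so memory grows with the product of all four sizes. A small back-of-the-envelope helper (hypothetical names, float32 assumed) makes it easy to see how much lowering `batch_size` helps:

```python
def tensor_mib(shape, bytes_per_element=4):
    """Size in MiB of a dense tensor with the given shape (float32 = 4 bytes by default)."""
    n = 1
    for dim in shape:
        n *= dim
    return n * bytes_per_element / 2**20


def pairwise_aug_mib(batch, max_d, max_p, hidden, bytes_per_element=4):
    """Size of one (batch, max_d, max_p, hidden) tensor, such as d_aug after .repeat()."""
    return tensor_mib((batch, max_d, max_p, hidden), bytes_per_element)
```

Because the size is linear in `batch`, halving the DataLoader's `batch_size` halves each of these intermediate tensors, which is usually the first thing to try on a 4 GiB GPU.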

TORCH.UTILS.DATA

- At the heart of PyTorch data loading utility is the torch.utils.data.DataLoader class. It represents a Python iterable over a dataset, with support for
  - map-style and iterable-style datasets,
  - customizing data loading order,
  - automatic batching,
  - single- and multi-process data loading,
  - automatic memory pinning.

- DataLoader(dataset, batch_size=1, shuffle=False, sampler=None, batch_sampler=None, num_workers=0, collate_fn=None, pin_memory=False, drop_last=False, timeout=0, worker_init_fn=None, *, prefetch_factor=2, persistent_workers=False)

CLASS torch.utils.data.Dataset

- An abstract class representing a Dataset.
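A map-style dataset implements `__getitem__` (and usually `__len__`). With the defaults, DataLoader walks the indices in order (or shuffles them when `shuffle=True`), groups them into batches of `batch_size`, and drops the last incomplete batch only when `drop_last=True`. The pure-Python sketch below imitates that sampling and batching logic; `SquaresDataset` and `iterate_batches` are hypothetical names, and unlike the real default `collate_fn` it returns plain lists instead of stacking samples into tensors.

```python
import random


class SquaresDataset:
    """Hypothetical map-style dataset: index -> (x, x**2)."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        if not 0 <= idx < self.n:
            raise IndexError(idx)
        return idx, idx * idx


def iterate_batches(dataset, batch_size=1, shuffle=False, drop_last=False, seed=None):
    """Yield lists of samples, roughly like DataLoader's automatic batching."""
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # random order, like shuffle=True
    batch = []
    for idx in indices:
        batch.append(dataset[idx])
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch and not drop_last:
        yield batch  # last, smaller batch
```

For example, five samples with `batch_size=2` give batches of sizes 2, 2 and 1, or only the first two batches with `drop_last=True`.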

MolTrans – FCS

- subword-nmt

- About

- Unsupervised Word Segmentation for Neural Machine Translation and Text Generation

- Installation

pip install subword-nmt
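subword-nmt segments text with byte pair encoding (BPE): start from single characters and repeatedly merge the most frequent adjacent pair of symbols. Since the note lists it under MolTrans – FCS, here is a minimal sketch of how BPE merges are learned, for instance on SMILES-like strings. This is not subword-nmt's code; `learn_bpe` is a hypothetical name, and details such as end-of-word markers and frequency thresholds are omitted.

```python
from collections import Counter


def learn_bpe(words, num_merges):
    """Learn up to num_merges BPE merge operations from a list of strings."""
    # each word starts as a tuple of single-character symbols
    vocab = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        # count adjacent symbol pairs, weighted by word frequency
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent pair (first one on ties)
        merges.append(best)
        # rewrite every word with the chosen pair merged into one symbol
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges
```

On `["CCO", "CCN", "CCC"]` the first merge is `("C", "C")`, because that pair occurs four times.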


Python

enumerate

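- Returns an enumerate object. iterable must be a sequence, an iterator, or some other object that supports iteration. Each item is a tuple containing a count (from start, which defaults to 0) and the corresponding value from iterable.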

seasons = ['Spring', 'Summer', 'Fall', 'Winter']

list(enumerate(seasons)) # [(0, 'Spring'), (1, 'Summer'), (2, 'Fall'), (3, 'Winter')]

list(enumerate(seasons, start=1)) # [(1, 'Spring'), (2, 'Summer'), (3, 'Fall'), (4, 'Winter')]
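The Python documentation describes enumerate as equivalent to a small generator. Here it is under the hypothetical name `my_enumerate`, so it does not shadow the built-in; it works on iterators as well as sequences.

```python
def my_enumerate(iterable, start=0):
    """Generator equivalent of the built-in enumerate()."""
    n = start
    for elem in iterable:
        yield n, elem
        n += 1
```

This is the same pattern the training loop above relies on in `for i, (d, p, d_mask, p_mask, label) in enumerate(training_generator)`.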

What is the difference between arguments and parameters?

- Parameters are defined by the names that appear in a function definition, whereas arguments are the values actually passed to a function when calling it. Parameters define what types of arguments a function can accept.

For example, given the function definition:

def func(foo, bar=None, **kwargs):
    pass

foo, bar and kwargs are parameters of func. However, when calling func, for example:

func(42, bar=314, extra=somevar)

the values 42, 314, and somevar are arguments.
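The standard library's `inspect` module shows the distinction directly: `signature(...).parameters` lists the parameter names from the definition, and `signature(...).bind(...)` maps the arguments of a particular call onto them. The value of `somevar` below is a placeholder assumption so the call can run.

```python
import inspect


def func(foo, bar=None, **kwargs):
    pass


somevar = "some value"  # placeholder so the example call can run

# parameters: the names that appear in the function definition
param_names = list(inspect.signature(func).parameters)

# arguments: the values bound to those names by one particular call
bound = inspect.signature(func).bind(42, bar=314, extra=somevar)
```

Here `param_names` is `['foo', 'bar', 'kwargs']`, while `bound.arguments` maps `foo` to 42, `bar` to 314 and `kwargs` to `{'extra': somevar}`.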

Role of Underscore(_) in Python

- Use In Interpreter

>>> 54 + 45

99

>>> _

99

>>> 1 + _

100

>>> 2*_

200

- Ignoring Values

## ignoring a value

a, _, b = (1, 2, 3) # a = 1, b = 3

print(a, b)

## ignoring multiple values

## *(variable) is used to assign multiple values to a variable as a list while unpacking

## it's called "Extended Unpacking", only available in Python 3.x

a, *_, b = (7, 6, 5, 4, 3, 2, 1)

print(a, b)

1 3

7 1

- Use In Looping
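When a loop only needs to run a certain number of times and never uses the loop variable, the convention is to name that variable `_`. A tiny illustration with a hypothetical function:

```python
def repeat_greeting(times):
    """Collect a greeting `times` times; the loop variable is unused, so it is named _."""
    greetings = []
    for _ in range(times):
        greetings.append("hello")
    return greetings
```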

- Naming (the most important one)

- Underscore (_) can be used to name variables, functions, classes, etc.:

- Single Pre Underscore: _variable

  - A single leading underscore signals that a name is meant for internal use only. Python does not enforce this, but from module import * skips such names (unless the module lists them in __all__):

## filename: my_functions.py
def func():
    return "datacamp"

def _private_func():
    return 7

>>> from my_functions import *
>>> func()
'datacamp'
>>> _private_func()
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
NameError: name '_private_func' is not defined

- Single Post Underscore: variable_

- Double Pre Underscores: __variable

- Double Pre and Post Underscores: __variable__
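The three remaining naming patterns can be sketched together. A single trailing underscore is a convention to avoid clashing with a keyword (`class_`); a double leading underscore inside a class triggers name mangling, so `__secret` is stored as `_ClassName__secret` and subclasses cannot overwrite it by accident; double leading and trailing underscores mark special ("dunder") methods such as `__len__`, which Python calls for you. The classes and function names below are hypothetical.

```python
class Base:
    def __init__(self):
        self.__secret = 1  # double leading underscore: stored as _Base__secret


class Child(Base):
    def __init__(self):
        super().__init__()
        self.__secret = 2  # stored as _Child__secret, so Base's value is not overwritten


def describe(class_="warrior"):
    # single trailing underscore: avoids a clash with the keyword `class`
    return class_


class Box:
    def __init__(self, items):
        self.items = items

    def __len__(self):  # dunder method: called by the built-in len()
        return len(self.items)
```

After `c = Child()`, both `c._Base__secret` (1) and `c._Child__secret` (2) exist side by side, and `len(Box([1, 2, 3]))` returns 3.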

- Class attribute vs. Instance attribute
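A class attribute is defined in the class body and shared by every instance; an instance attribute is set on `self` and belongs to one object. Assigning to the class attribute's name through an instance creates an instance attribute that shadows it, leaving the class and other instances unchanged. A minimal sketch with a hypothetical `Dog` class:

```python
class Dog:
    kind = "canine"  # class attribute: shared by all instances

    def __init__(self, name):
        self.name = name  # instance attribute: unique to each instance
```

With `a = Dog("Fido")` and `b = Dog("Buddy")`, both report `kind == "canine"`; after `a.kind = "wolf"`, only `a` sees `"wolf"`, while `b.kind` and `Dog.kind` stay `"canine"`.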

Python glossary

attribute

- A value associated with an object which is referenced by name using dotted expressions. For example, if an object o has an attribute a it would be referenced as o.a.

What PEP means

- PEP stands for Python Enhancement Proposal. See PEP 0 – Index of Python Enhancement Proposals (PEPs).

method

- A function which is defined inside a class body.

Machine Learning

AUPRC

- The area under the precision-recall curve (AUPRC) is a useful performance metric for imbalanced data in a problem setting where you care a lot about finding the positive examples.

AUROC vs. AUPRC

- Usually you would obtain the same conclusion based on both measures. (Source)

- ROC AUC is the area under the curve where x is the false positive rate (FPR) and y is the true positive rate (TPR).

- PR AUC is the area under the curve where x is recall and y is precision. (Source)

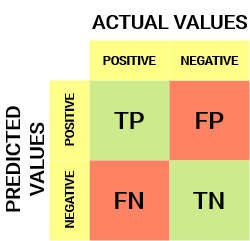
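Both areas can be computed from labels and scores without plotting. ROC AUC equals the probability that a randomly chosen positive gets a higher score than a randomly chosen negative (ties count as one half). For the PR curve, a common step-wise summary is average precision (AP): the mean of the precision values at the rank of each positive. As far as I know, this matches scikit-learn's `average_precision_score` when there are no tied scores, but treat that as an assumption. The function names are hypothetical and the code is O(n²) for clarity.

```python
def roc_auc(labels, scores):
    """ROC AUC as P(score of a random positive > score of a random negative)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """AP: mean precision at the rank of each positive (assumes no tied scores)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = 0
    precisions = []
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            tp += 1
            precisions.append(tp / rank)  # precision at this cut-off
    if not precisions:
        raise ValueError("need at least one positive")
    return sum(precisions) / len(precisions)
```

For labels `[1, 0, 1, 0]` and scores `[0.9, 0.8, 0.7, 0.1]`, ROC AUC is 3/4 = 0.75 and AP is (1 + 2/3) / 2 ≈ 0.833.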

Confusion matrix

- A confusion matrix (error matrix) is a specific table layout that allows visualization of the performance of an algorithm, typically a supervised learning one.

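- When confused: the first word (True/False) says whether the prediction was correct, and the second word (Positive/Negative) is the predicted class. For example, FP means "predicted positive, actually negative".

- $TP+FN=P$, $FP+TN=N$, $TP+FP=P'$, $FN+TN=N'$, where $P$ and $N$ count actual positives and negatives, and $P'$ and $N'$ count predicted positives and negatives.

- Accuracy: proportion of correct predictions among all observations. \(\frac{TP+TN}{P+N}\)

- Error-rate: proportion of all observations where the prediction differs from the actual value. \(\frac{FP+FN}{P+N}=\frac{FP+FN}{(TP+FN)+(FP+TN)}\)

- Precision: proportion of true positives among all predicted positives. PPV. \(\frac{TP}{P'} =\frac{TP}{TP+FP}\)

- Recall: proportion of true positives among all actual positives. \(\frac{TP}{P} = \frac{TP}{TP+FN}\)

- Sensitivity: proportion of actual positives that are predicted correctly. TPR. \(\frac{TP}{P}=\frac{TP}{TP+FN}\)

- Specificity: proportion of actual negatives that are predicted correctly. TNR, which equals \(1-\mathrm{FPR}\) with \(\mathrm{FPR}=\frac{FP}{N}\). \(\frac{TN}{N}=\frac{TN}{FP+TN}\)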

$\mathrm{Precision}=\mathrm{PPV} , \mathrm{PPV}\neq \mathrm{FPR}$

$\mathrm{recall} = \mathrm{TPR} = \mathrm{Sensitivity}$

- F1 Score: Precision과 Recall의 조화평균 \(\frac{2*\mathrm{Precision}*\mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}} = \frac{2*TP}{2*TP+FP+FN}\)
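The definitions above translate directly into code. This sketch (hypothetical function names) counts TP, FP, FN and TN from binary labels, with 1 as positive and 0 as negative, and derives each metric, assuming no denominator is zero. The F1 line uses the \(\frac{2TP}{2TP+FP+FN}\) form, which equals the harmonic mean of precision and recall.

```python
def confusion_counts(actual, predicted):
    """Count TP, FP, FN, TN for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    return tp, fp, fn, tn


def metrics(tp, fp, fn, tn):
    """Metrics from the list above; assumes no denominator is zero."""
    p, n = tp + fn, fp + tn  # actual positives (P) / actual negatives (N)
    p_pred = tp + fp         # predicted positives (P')
    return {
        "accuracy": (tp + tn) / (p + n),
        "error_rate": (fp + fn) / (p + n),
        "precision": tp / p_pred,            # PPV
        "recall": tp / p,                    # = sensitivity = TPR
        "specificity": tn / n,               # TNR
        "fpr": fp / n,                       # = 1 - specificity
        "f1": 2 * tp / (2 * tp + fp + fn),   # harmonic mean of precision and recall
    }
```

For example, `metrics(8, 2, 4, 6)` gives accuracy 0.7, precision 0.8, recall 2/3, specificity 0.75 and F1 = 16/22 ≈ 0.727.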